#300 — April 10, 2019

Postgres Weekly

How To Improve The Performance of Laurenz Albe

When A Vulnerability is Not a Vulnerability — Sometimes intentional features can get recorded as ‘vulnerabilities’ for the record, such as with CVE-2019-9193 and Postgres’s Magnus Hagander

Real-Time Postgres Performance Monitoring — Collect out-of-the-box and custom PostgreSQL metrics and correlate them with data from your distributed infrastructure, applications, and logs. Gain real-time performance insights and set intelligent alerts with Datadog. Start a free trial. Datadog sponsor |


PGCon 2019 Registration Now Open — This year the popular Postgres conference takes place in Ottawa, Canada from May 28-31, with two days of tutorials, two days of talks, and an unconference. PGCon


pgmetrics 1.6.2: Collect and Display Stats from a Running Postgres Server — The homepage has a lot more info including example output. RapidLoop |


Controlling Postgres' Planner with Explicit Postgres Documentation

Why Richard Yen

Continuous Replication From a Legacy Postgres Version to a Newer Version Using Slony — Native streaming replication in PostgreSQL works only between servers running the same major version, but Slony provides an application-level alternative. Nickolay Ihalainen |


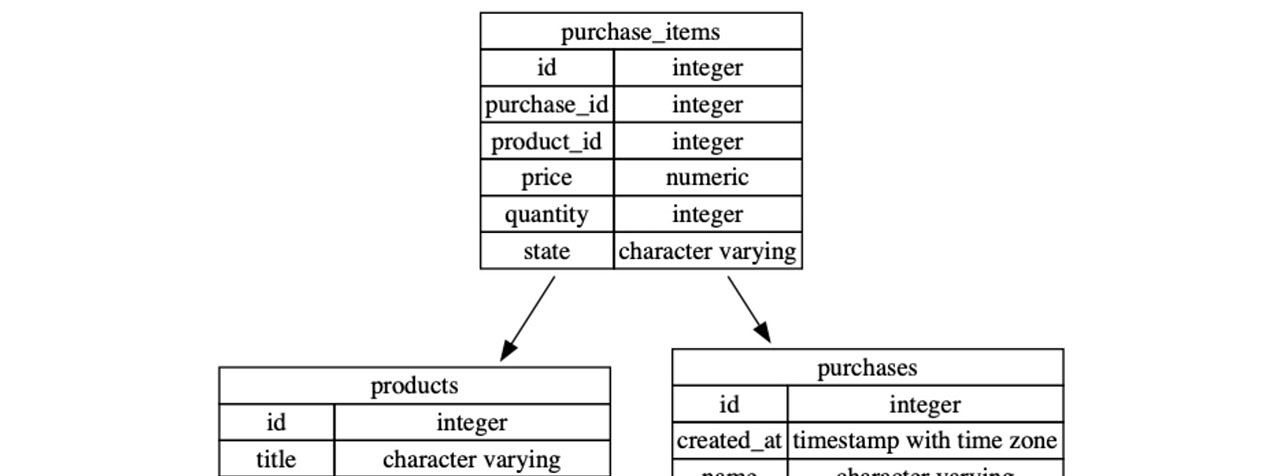

dbdot: Generate DOT Descriptions for Postgres Schemas — Essentially another way to create diagrams of your database schema. Aashish Karki |


How CockroachDB Brought JSONB to Their Distributed Database — ...plus why they went with Postgres syntax. Cockroach Labs sponsor |


vipsql: A Vim Plugin for Interacting with 'psql' — Version 2.0 came out just a couple of weeks ago. Martin Gammelsæter |
